Competitive programming involves solving complex problems under tight time limits, testing critical thinking, logic, and an understanding of algorithms, coding, and natural language. Until recently, the task was considered particularly difficult for AI systems. AlphaCode was the first such system to compete at the level of the median participant; its successor, AlphaCode 2, powered by Gemini Pro, shows significantly better performance.

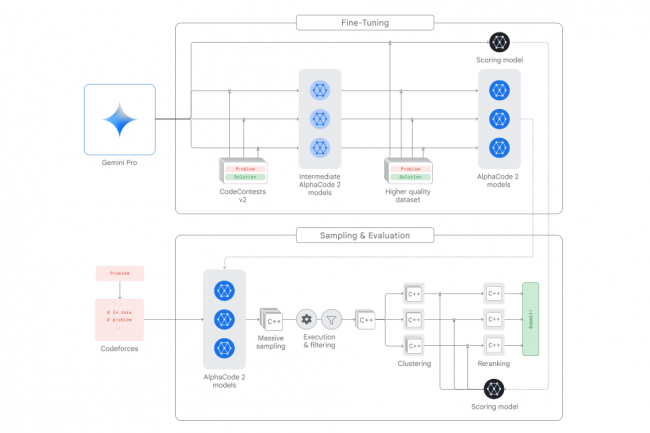

AlphaCode 2 combines powerful language models with a sophisticated search and reranking mechanism designed specifically for competitive programming. Its main components are a family of policy models that generate code samples, a sampling mechanism that encourages broad diversity, a filtering step that removes samples inconsistent with the problem description, a clustering algorithm that avoids redundancy, and a scoring model that selects the best candidate from each of the 10 largest clusters.

Credit: Google DeepMind. How AlphaCode 2 works.

To improve these components, the system builds on Gemini Pro and applies two rounds of fine-tuning: first on the CodeContests dataset, which contains about 15 thousand problems and 30 million human code samples, then on a second, “high quality” dataset, according to Google's technical report.
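The selection stages described above (filter, cluster, score) can be illustrated with a minimal Python sketch. It is not DeepMind's implementation: candidates are plain Python callables standing in for generated programs, clusters are formed by grouping candidates that produce identical outputs on a few probe inputs (an assumption made for this sketch), and `score` is a hypothetical stand-in for the learned scoring model. All function and parameter names are invented for illustration.

```python
from collections import defaultdict
from typing import Callable, Sequence, Tuple

# Toy stand-in for a generated program: maps one input to one output.
Candidate = Callable[[int], int]


def passes_examples(cand: Candidate, examples: Sequence[Tuple[int, int]]) -> bool:
    """Filtering: keep a candidate only if it reproduces every public example."""
    for x, expected in examples:
        try:
            if cand(x) != expected:
                return False
        except Exception:
            return False
    return True


def behaviour(cand: Candidate, probe_inputs: Sequence[int]) -> tuple:
    """Behavioural signature: the candidate's outputs on extra probe inputs."""
    outputs = []
    for x in probe_inputs:
        try:
            outputs.append(cand(x))
        except Exception:
            outputs.append("error")
    return tuple(outputs)


def select_submissions(
    candidates: Sequence[Candidate],
    examples: Sequence[Tuple[int, int]],
    probe_inputs: Sequence[int],
    score: Callable[[Candidate], float],  # hypothetical scoring model
    max_clusters: int = 10,
) -> list:
    # 1. Filter out candidates that fail the public examples.
    survivors = [c for c in candidates if passes_examples(c, examples)]

    # 2. Cluster survivors that behave identically on the probe inputs.
    clusters = defaultdict(list)
    for c in survivors:
        clusters[behaviour(c, probe_inputs)].append(c)

    # 3. Keep the largest clusters and pick the best-scoring member of each.
    largest = sorted(clusters.values(), key=len, reverse=True)[:max_clusters]
    return [max(cluster, key=score) for cluster in largest]
```

Grouping by behaviour means that many textually different but equivalent programs consume only one submission slot, which is how clustering avoids redundancy in this sketch.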

AlphaCode 2 vs. AlphaCode

DeepMind evaluated AlphaCode on Codeforces, a popular platform where tens of thousands of developers from around the world test their coding skills. Across 10 contests with 5,000 competitors, it ranked within the top 54.3% on average, roughly the level of the median competitor.

AlphaCode 2 was evaluated on 77 problems from 12 contests on the same platform and solved 43% of them, 1.7 times as many as its predecessor. Measured against more than 8,000 developers, it outperformed more than 85% of them, placing it between the ‘Expert’ and ‘Candidate Master’ categories on Codeforces. In the two contests where it performed best, it outperformed more than 99.5% of participants.

In addition, AlphaCode 2 is 10,000 times more sample-efficient than AlphaCode: it needs only about a hundred samples to reach the performance its predecessor achieved with a million.
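As a rough back-of-the-envelope check, the figures quoted above can be combined in a few lines of Python. The sample counts, solve rate, and improvement factor come from the article; the number of problems solved and the predecessor's implied solve rate are derived here and are approximations, not reported values.

```python
# Figures quoted in the article.
alphacode_samples = 1_000_000  # "a million samples"
alphacode2_samples = 100       # "about a hundred samples"
problems = 77                  # problems in the evaluation
solve_rate_v2 = 0.43           # share solved by AlphaCode 2
improvement = 1.7              # factor over the predecessor

# Derived quantities (approximate, not stated in the article).
sample_efficiency_gain = alphacode_samples / alphacode2_samples
problems_solved_v2 = round(solve_rate_v2 * problems)
implied_solve_rate_v1 = solve_rate_v2 / improvement

print(f"sample efficiency gain: {sample_efficiency_gain:,.0f}x")
print(f"problems solved by AlphaCode 2: about {problems_solved_v2} of {problems}")
print(f"implied AlphaCode solve rate: about {implied_solve_rate_v1:.0%}")
```

The ratio of one million to one hundred samples gives the 10,000-fold figure, and dividing 43% by 1.7 suggests the predecessor solved roughly a quarter of comparable problems.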

According to the DeepMind team:

“Despite AlphaCode 2’s impressive results, there is still much work to be done before we can have systems that can reliably achieve the performance of the best human coders. Our system requires a lot of trial and error, and remains too expensive to operate on a large scale. Additionally, it relies heavily on the ability to filter out obviously bad code samples.